May 17, 2023

Dev Notes: How to Fix Microsoft Azure Text-to-Speech Not Working with Chinese Text

This problem can be reproduced with the official sample code provided by Azure:

Text to speech quickstart – Speech service – Azure Cognitive Services | Microsoft Learn

Text to Speech – Realistic AI Voice Generator | Microsoft Azure

```cpp
#include <iostream>
#include <memory>   // std::make_unique
#include <stdlib.h>
#include <string>
#include <speechapi_cxx.h>

using namespace Microsoft::CognitiveServices::Speech;
using namespace Microsoft::CognitiveServices::Speech::Audio;

std::string GetEnvironmentVariable(const char* name);

int main()
{
    // This example requires environment variables named "SPEECH_KEY" and "SPEECH_REGION"
    auto speechKey = GetEnvironmentVariable("SPEECH_KEY");
    auto speechRegion = GetEnvironmentVariable("SPEECH_REGION");

    if ((size(speechKey) == 0) || (size(speechRegion) == 0)) {
        std::cout << "Please set both SPEECH_KEY and SPEECH_REGION environment variables." << std::endl;
        return -1;
    }

    auto speechConfig = SpeechConfig::FromSubscription(speechKey, speechRegion);

    // The language of the voice that speaks.
    speechConfig->SetSpeechSynthesisVoiceName("en-US-JennyNeural");

    auto speechSynthesizer = SpeechSynthesizer::FromConfig(speechConfig);

    // Get text from the console and synthesize to the default speaker.
    std::cout << "Enter some text that you want to speak >" << std::endl;
    std::string text;
    getline(std::cin, text);

    auto result = speechSynthesizer->SpeakTextAsync(text).get();

    // Checks result.
    if (result->Reason == ResultReason::SynthesizingAudioCompleted)
    {
        std::cout << "Speech synthesized to speaker for text [" << text << "]" << std::endl;
    }
    else if (result->Reason == ResultReason::Canceled)
    {
        auto cancellation = SpeechSynthesisCancellationDetails::FromResult(result);
        std::cout << "CANCELED: Reason=" << (int)cancellation->Reason << std::endl;

        if (cancellation->Reason == CancellationReason::Error)
        {
            std::cout << "CANCELED: ErrorCode=" << (int)cancellation->ErrorCode << std::endl;
            std::cout << "CANCELED: ErrorDetails=[" << cancellation->ErrorDetails << "]" << std::endl;
            std::cout << "CANCELED: Did you set the speech resource key and region values?" << std::endl;
        }
    }

    std::cout << "Press enter to exit..." << std::endl;
    std::cin.get();
}

std::string GetEnvironmentVariable(const char* name)
{
#if defined(_MSC_VER)
    size_t requiredSize = 0;
    (void)getenv_s(&requiredSize, nullptr, 0, name);
    if (requiredSize == 0)
    {
        return "";
    }
    auto buffer = std::make_unique<char[]>(requiredSize);
    (void)getenv_s(&requiredSize, buffer.get(), requiredSize, name);
    return buffer.get();
#else
    auto value = getenv(name);
    return value ? value : "";
#endif
}
```

I changed `en-US-JennyNeural` to `zh-CN-XiaochenNeural`, since my target language is Chinese.

Let's compile, link, and run. The program starts up without any trouble.

先尝试一下。这段因为是语音,所以我没法放测试结果,所以我直接给结论:

- 如果输入英文,完全正常。

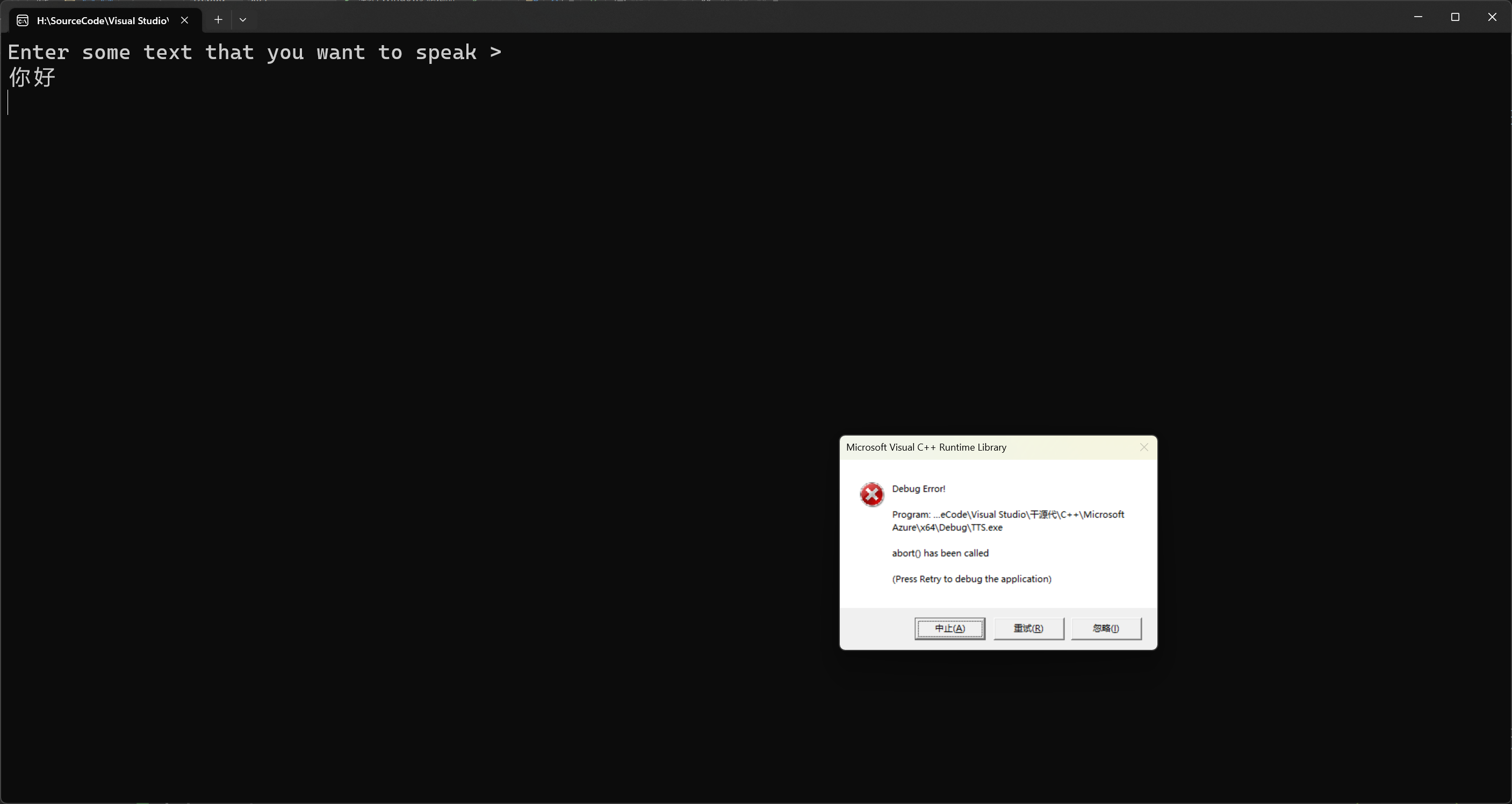

- 如果输入中文,会崩溃,如下:

I don't understand why, but after setting a breakpoint I found that the crash happens at `auto result = speechSynthesizer->SpeakTextAsync(text).get();`.
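One plausible explanation, which is my assumption and not something I verified: the C++ Speech SDK's `std::string` overloads expect UTF-8, while a Simplified Chinese Windows console typically hands over input in code page 936 (GBK). The GBK bytes for 你好 are `C4 E3 BA C3`, which is not valid UTF-8. The sketch below is a standard-library-only checker with the hypothetical name `IsValidUtf8`; it does not use any Azure API, it only lets you test that theory on `text` before calling `SpeakTextAsync`.

```cpp
#include <cstddef>
#include <string>

// Hypothetical diagnostic helper: returns true if `s` is well-formed UTF-8.
// Rejects stray continuation bytes, truncated sequences, overlong forms,
// UTF-16 surrogate code points and values above U+10FFFF.
bool IsValidUtf8(const std::string& s) {
    // Smallest code point allowed for each sequence length (index = length).
    constexpr char32_t kMinCodePoint[5] = {0, 0, 0x80, 0x800, 0x10000};
    std::size_t i = 0;
    const std::size_t n = s.size();
    while (i < n) {
        const unsigned char c = static_cast<unsigned char>(s[i]);
        std::size_t len;
        char32_t cp;
        if (c < 0x80) { ++i; continue; }                     // plain ASCII
        else if ((c & 0xE0) == 0xC0) { len = 2; cp = c & 0x1F; }
        else if ((c & 0xF0) == 0xE0) { len = 3; cp = c & 0x0F; }
        else if ((c & 0xF8) == 0xF0) { len = 4; cp = c & 0x07; }
        else return false;                                   // invalid lead byte
        if (i + len > n) return false;                       // truncated sequence
        for (std::size_t k = 1; k < len; ++k) {
            const unsigned char cc = static_cast<unsigned char>(s[i + k]);
            if ((cc & 0xC0) != 0x80) return false;           // not a continuation byte
            cp = (cp << 6) | (cc & 0x3F);
        }
        if (cp < kMinCodePoint[len]) return false;           // overlong encoding
        if (cp >= 0xD800 && cp <= 0xDFFF) return false;      // surrogate
        if (cp > 0x10FFFF) return false;                     // beyond Unicode range
        i += len;
    }
    return true;
}
```

For example, printing a warning when `!IsValidUtf8(text)` right before `SpeakTextAsync(text)` would show whether the console input is what trips up the SDK.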

I'll skip straight to the fix:

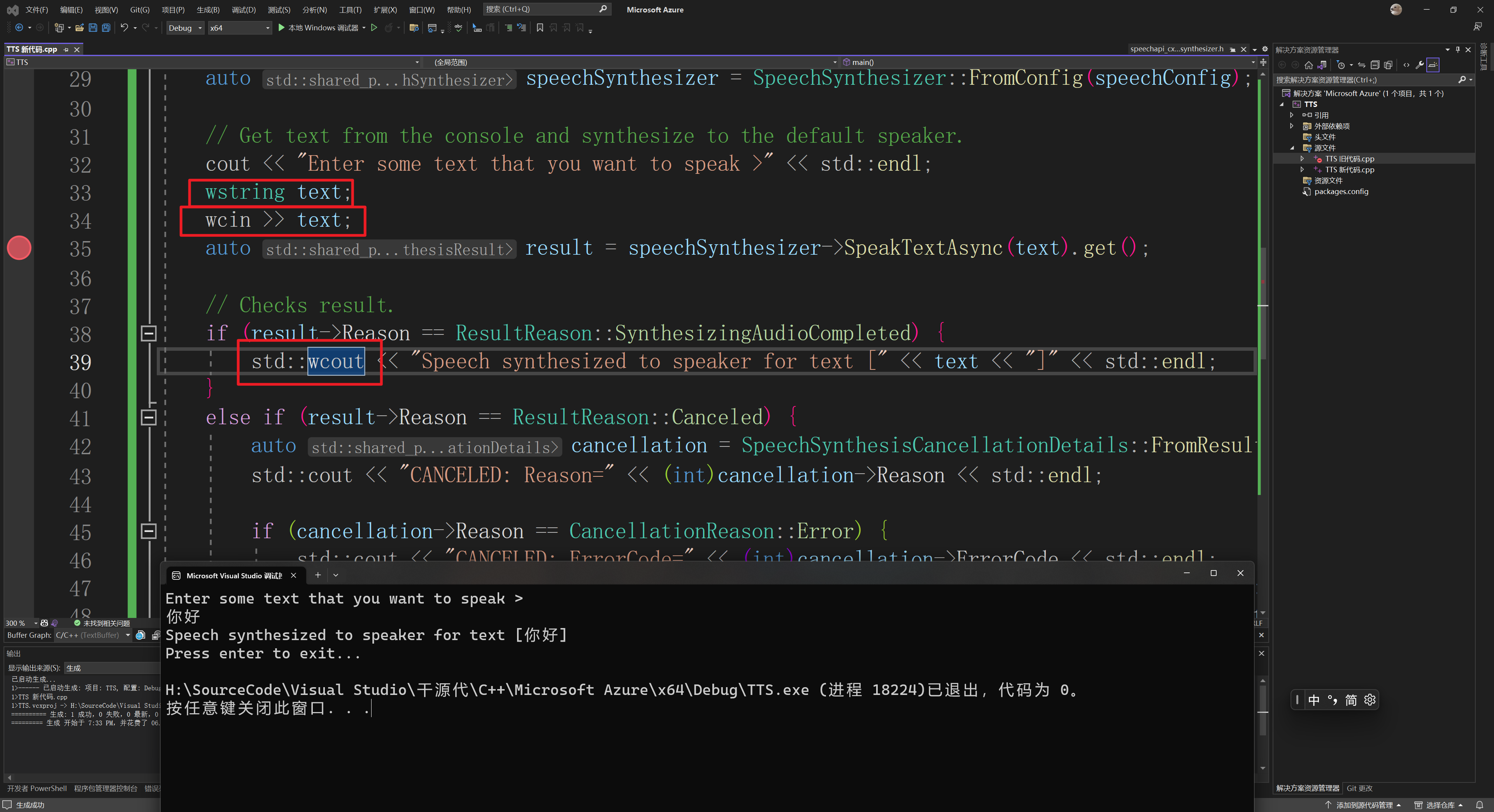
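The later steps in this post read `text` as a `std::wstring` through `std::wcin`. If you would rather keep the sample's `std::string` variable, one alternative (my own assumption, not taken from the fix above) is to convert the wide string to UTF-8 yourself before passing it to `SpeakTextAsync`. `WideToUtf8` below is a hypothetical helper written with the standard library only. I wrote the conversion by hand because `std::wstring_convert` and `std::codecvt_utf8` are deprecated since C++17. It assumes the wide string was decoded correctly in the first place.

```cpp
#include <cstddef>
#include <string>

// Append one Unicode code point to `out` as UTF-8 (assumes cp <= U+10FFFF).
static void AppendUtf8(std::string& out, char32_t cp) {
    if (cp < 0x80) {
        out += static_cast<char>(cp);
    } else if (cp < 0x800) {
        out += static_cast<char>(0xC0 | (cp >> 6));
        out += static_cast<char>(0x80 | (cp & 0x3F));
    } else if (cp < 0x10000) {
        out += static_cast<char>(0xE0 | (cp >> 12));
        out += static_cast<char>(0x80 | ((cp >> 6) & 0x3F));
        out += static_cast<char>(0x80 | (cp & 0x3F));
    } else {
        out += static_cast<char>(0xF0 | (cp >> 18));
        out += static_cast<char>(0x80 | ((cp >> 12) & 0x3F));
        out += static_cast<char>(0x80 | ((cp >> 6) & 0x3F));
        out += static_cast<char>(0x80 | (cp & 0x3F));
    }
}

// Hypothetical helper: convert a wide string to UTF-8.
// Works with 16-bit wchar_t (Windows, UTF-16 with surrogate pairs) and
// 32-bit wchar_t (Linux/macOS, UTF-32). Unpaired surrogates are encoded
// as-is, which is not strictly valid UTF-8.
std::string WideToUtf8(const std::wstring& in) {
    std::string out;
    for (std::size_t i = 0; i < in.size(); ++i) {
        char32_t cp = static_cast<char32_t>(in[i]);
        // Combine a UTF-16 high/low surrogate pair into one code point.
        if (cp >= 0xD800 && cp <= 0xDBFF && i + 1 < in.size()) {
            const char32_t lo = static_cast<char32_t>(in[i + 1]);
            if (lo >= 0xDC00 && lo <= 0xDFFF) {
                cp = 0x10000 + ((cp - 0xD800) << 10) + (lo - 0xDC00);
                ++i;
            }
        }
        AppendUtf8(out, cp);
    }
    return out;
}
```

With this, the call would become `SpeakTextAsync(WideToUtf8(wideText))`, where `wideText` is whatever `std::wstring` you read from `std::wcin`.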

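However, solving one problem created a new one.

This is TTS, text to speech, so where's my speech? I didn't hear a thing! Have I gone deaf? Surely not.

Again, I'll just give the conclusion, because I don't fully understand it either.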

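If you don't use `wcin` and instead assign a value to `text` directly, the program works normally and you hear the speech.

For example: `text = L"你好";`

So it's clear: the problem lies with `wcin`.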
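Why would `wcin` make the sound disappear? My guess, not verified against the MSVC runtime: with the default "C" locale, `wcin` may widen each input byte into its own `wchar_t` instead of decoding the console's GBK sequence. Then 你好 (4 GBK bytes) arrives as the four Latin-1 characters `ÄãºÃ` rather than the two characters you typed, and a Chinese voice may have nothing sensible to say about them. `WidenByteByByte` below is a hypothetical, simplified model of that behaviour, not the real `codecvt` implementation.

```cpp
#include <string>

// Hypothetical model of a byte-for-byte narrow-to-wide conversion:
// every input byte becomes one wchar_t with the same numeric value,
// so a multi-byte character is split into several unrelated characters.
std::wstring WidenByteByByte(const std::string& bytes) {
    std::wstring out;
    out.reserve(bytes.size());
    for (char b : bytes) {
        // Go through unsigned char so bytes >= 0x80 are not sign-extended.
        out += static_cast<wchar_t>(static_cast<unsigned char>(b));
    }
    return out;
}
```

Feeding it the GBK bytes `"\xC4\xE3\xBA\xC3"` yields a four-character string starting with `L'\u00C4'`, not `L"你好"`.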

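So add one line of code before reading with `wcin`:

```cpp
std::wcin.imbue(std::locale("chs"));
```

Tip: the `wcin.imbue(::std::locale("zh_CN.UTF-8"));` from my previous article doesn't work here. Don't ask why; I tried it myself and I don't know the reason either.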
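Locale names are platform-specific: `"chs"` is a name the Windows C runtime understands, while names like `"zh_CN.UTF-8"` follow POSIX conventions, and the `std::locale` constructor throws `std::runtime_error` when the runtime does not recognise a name. That may or may not be what happened with `"zh_CN.UTF-8"` here. The hypothetical helper `TryMakeLocale` below is a small sketch that makes a rejected name visible instead of letting the exception escape at the `imbue` call.

```cpp
#include <locale>
#include <optional>
#include <stdexcept>
#include <string>

// Hypothetical helper: build a named locale, or return std::nullopt if the
// C++ runtime does not recognise the name (std::locale throws
// std::runtime_error in that case).
std::optional<std::locale> TryMakeLocale(const std::string& name) {
    try {
        return std::locale(name);
    } catch (const std::runtime_error&) {
        return std::nullopt;
    }
}
```

In the sample, this could be used as `if (auto loc = TryMakeLocale("chs")) std::wcin.imbue(*loc); else std::wcerr << L"locale not available\n";` right before reading the text.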

With that, the problem is solved and Chinese TTS output works normally.